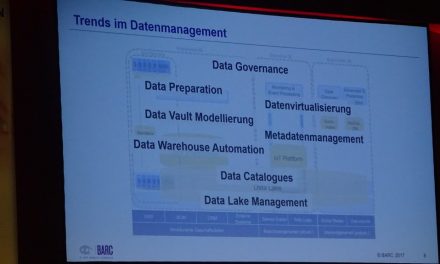

For a long time, the ultimate goal in Data Warehousing was (and still is) the “single version of the truth.” Yet an integrated and cleansed version of the information is subjective and changes over time. Data Vault and Data Lakes with schema-on-read advocate storing all data in its original, raw form instead. The goal is to provide all the data, all the time, as a “single version of the facts.” Similarly, Metadata Management delivered (and still delivers) deeply engineered artifacts that describe the desired truth, based on the waterfall methodology.

Joe Hellerstein (UC Berkeley) gave a talk titled “Big Data at a crossroads: Time to go meta (on use)” at Strata + Hadoop World New York 2015. His ideas are the basis for this blog post.

Metadata & schema-on-read/write

What is metadata? Metadata is data about data. With an RDBMS and defined schemas, it used to be simple: tables, columns, and data types are obvious. Variety makes deriving metadata on the fly the biggest challenge. What about schema-on-read? Schema-on-read applies the schema when data is retrieved, not when it is stored. Data can be stored in CSV files, JSON files, etc. A log file, for example, contains timestamps or error text. What is the data and what is the metadata? The same file mixes both kinds of information. Is the timestamp data or metadata? Or does the file contain no metadata at all, so that you have to deduce it?
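
The question of what counts as data and what counts as metadata can be illustrated with a minimal Python sketch of schema-on-read. The raw log text is stored untouched, and a schema is applied only at read time. The log format, the regular-expression schemas `LOG_SCHEMA` and `TEXT_SCHEMA`, and the helper `read_with_schema` are hypothetical illustrations, not part of any particular tool. The first reader treats the timestamp as a typed field; the second reads the same bytes as a day plus free text.

```python
import re
from datetime import datetime

# Raw data is stored as-is: no schema is enforced when it is written.
RAW_LOG = """\
2017-03-01 12:00:01 ERROR disk full on /dev/sda1
2017-03-01 12:00:05 INFO backup started
"""

# Hypothetical schema 1: timestamp and level are structured fields.
LOG_SCHEMA = re.compile(
    r"(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) (?P<level>[A-Z]+) (?P<message>.*)"
)

# Hypothetical schema 2: only the day is structure, the rest is free text.
TEXT_SCHEMA = re.compile(r"(?P<day>\d{4}-\d{2}-\d{2}) (?P<text>.*)")


def parse_ts(value):
    """Convert the timestamp string into a datetime."""
    return datetime.strptime(value, "%Y-%m-%d %H:%M:%S")


def read_with_schema(raw, schema, converters=None):
    """Apply `schema` at read time; lines that do not match are skipped."""
    converters = converters or {}
    records = []
    for line in raw.splitlines():
        match = schema.fullmatch(line)
        if match is None:
            continue
        # Each named group becomes a field, converted if a converter is given.
        records.append(
            {name: converters.get(name, str)(value)
             for name, value in match.groupdict().items()}
        )
    return records


# Two interpretations of the same raw text.
events = read_with_schema(RAW_LOG, LOG_SCHEMA, {"ts": parse_ts})
daily_text = read_with_schema(RAW_LOG, TEXT_SCHEMA)
```

Whether the timestamp is "data" or "metadata" is decided by the reader's schema, not by the stored file.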

Different usage scenarios will have different views on metadata. There is no longer a single version of the truth; multiple interpretations of the same data must be possible. Metadata must therefore be immutable and versioned.
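
One way to picture immutable, versioned metadata with several coexisting interpretations is an append-only store. The following Python sketch assumes a hypothetical `MetadataStore` in which every published interpretation becomes a new frozen version and nothing is ever updated in place.

```python
from dataclasses import dataclass
from types import MappingProxyType


@dataclass(frozen=True)
class MetadataVersion:
    """One immutable interpretation of a dataset (hypothetical structure)."""
    dataset: str
    version: int
    interpretation: str            # which usage scenario this view serves
    attributes: MappingProxyType   # read-only view of the metadata


class MetadataStore:
    """Append-only store: new versions are added, existing ones never change."""

    def __init__(self):
        self._versions = {}  # dataset name -> list of MetadataVersion

    def publish(self, dataset, interpretation, attributes):
        history = self._versions.setdefault(dataset, [])
        entry = MetadataVersion(
            dataset=dataset,
            version=len(history) + 1,
            interpretation=interpretation,
            attributes=MappingProxyType(dict(attributes)),  # copy, then freeze
        )
        history.append(entry)
        return entry

    def history(self, dataset):
        """All versions of a dataset's metadata, oldest first, as a tuple."""
        return tuple(self._versions.get(dataset, []))

    def latest(self, dataset, interpretation):
        """Most recent version for one interpretation, or None."""
        for entry in reversed(self._versions.get(dataset, [])):
            if entry.interpretation == interpretation:
                return entry
        return None


# Two usage scenarios interpret the same field differently; both coexist.
store = MetadataStore()
store.publish("weblogs", "operations", {"timestamp": "metadata"})
store.publish("weblogs", "analytics", {"timestamp": "data"})
store.publish("weblogs", "operations", {"timestamp": "metadata", "tz": "UTC"})
```

Because old versions are never overwritten, any earlier interpretation can still be looked up later.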

Metadata-on-use

Working with data produces a lot of metadata. For example, a data scientist wrangles, analyzes, and visualizes data to gain new insights. In the end, the data scientist produces a data pipeline with input data, a model, and output data. But all the insights gained along the way are lost, including the mistakes. “You will never know your data better than when you are wrangling and analyzing it,” says Joe Hellerstein. The community needs to write things down. Today's tools do a poor job of capturing how people interact and work with data. Jupyter Notebooks are a positive example of a step in the right direction.
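
Capturing metadata-on-use means recording what happens while data is wrangled, not just the final pipeline. As a minimal sketch, assuming plain Python lists of dicts as the data and an in-memory `USAGE_LOG`, a decorator can log every step with its parameters and row counts, and it also keeps failed steps so mistakes are not lost. The names `record_step`, `drop_missing`, and `scale` are hypothetical.

```python
import functools
import time

# Hypothetical in-memory record of how data was handled (metadata-on-use).
USAGE_LOG = []


def record_step(func):
    """Log each wrangling step with its parameters and outcome, including failures."""
    @functools.wraps(func)
    def wrapper(rows, **params):
        entry = {"step": func.__name__, "params": params,
                 "rows_in": len(rows), "started": time.time()}
        try:
            result = func(rows, **params)
        except Exception as exc:
            entry["error"] = repr(exc)  # keep the mistake instead of losing it
            USAGE_LOG.append(entry)
            raise
        entry["rows_out"] = len(result)
        USAGE_LOG.append(entry)
        return result
    return wrapper


@record_step
def drop_missing(rows, column):
    """Remove rows where `column` is missing."""
    return [r for r in rows if r.get(column) is not None]


@record_step
def scale(rows, column, factor):
    """Multiply `column` by `factor` in every row."""
    return [{**r, column: r[column] * factor} for r in rows]


rows = [{"price": 10}, {"price": None}, {"price": 4}]
try:
    scale(rows, column="price", factor=2)  # fails on None; the attempt is still logged
except TypeError:
    pass
clean = drop_missing(rows, column="price")
scaled = scale(clean, column="price", factor=2)
```

The log ends up describing the whole session, including the failed first attempt, which is exactly the knowledge that usually disappears.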

Recreating a data pipeline is only possible if details such as versions or configuration settings are known (interoperability, interpretation, reproducibility). Understanding the results depends on metadata about:

- how data is collected

- how data is wrangled

- how the model is built.
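
The three kinds of metadata listed above can be bundled into a single provenance record per run. The sketch below is a hypothetical layout, not a standard format: it stores environment details next to the collection, wrangling, and model settings, and computes a SHA-256 fingerprint of the pipeline description so that two runs with identical settings can be recognized. All field names and values are placeholders.

```python
import hashlib
import json
import platform
import sys


def provenance_record(collection, wrangling, model):
    """Bundle the metadata needed to reproduce a pipeline run (hypothetical layout)."""
    record = {
        "environment": {
            "python": sys.version.split()[0],
            "platform": platform.platform(),
        },
        "collection": collection,   # how data is collected
        "wrangling": wrangling,     # how data is wrangled
        "model": model,             # how the model is built
    }
    # Fingerprint only the pipeline description (environment excluded), using
    # canonical JSON so that key order does not change the hash.
    pipeline = {k: record[k] for k in ("collection", "wrangling", "model")}
    canonical = json.dumps(pipeline, sort_keys=True)
    record["fingerprint"] = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return record


settings = dict(
    collection={"source": "weblogs", "extracted_on": "2017-03-01"},
    wrangling=[{"step": "drop_missing", "column": "price"}],
    model={"algorithm": "linear_regression", "seed": 42},
)
run = provenance_record(**settings)
rerun = provenance_record(**settings)
```

Excluding the environment from the fingerprint is a design choice: it flags runs with the same logical pipeline even when they ran on different machines, while the environment block still documents where each run happened.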

Data Catalog with metadata services and APIs

Governance is about policies for the access, availability, integrity, and security of data. A governance approach can work by prevention or by audit: forbid up front or check later. Instead of dictating policies from above, Hellerstein favors policies based on broad agreement and collective intelligence.
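
The difference between governance by prevention and governance by audit can be sketched in a few lines of Python. The role-based `POLICY`, the dataset names, and the functions below are hypothetical; the sketch only contrasts the two enforcement styles and does not model Hellerstein's agreement-based approach.

```python
from datetime import datetime, timezone

# Hypothetical policy: which roles may read which datasets.
POLICY = {"salaries": {"hr"}, "weblogs": {"hr", "analyst"}}

AUDIT_TRAIL = []


def read_preventive(role, dataset):
    """Prevention: forbid access up front if the policy does not allow it."""
    if role not in POLICY.get(dataset, set()):
        raise PermissionError(f"{role} may not read {dataset}")
    return f"contents of {dataset}"


def read_audited(role, dataset):
    """Audit: allow access, but record it so it can be checked later."""
    AUDIT_TRAIL.append({"role": role, "dataset": dataset,
                        "at": datetime.now(timezone.utc)})
    return f"contents of {dataset}"


def audit_violations():
    """Later review: accesses that the policy would not have allowed."""
    return [e for e in AUDIT_TRAIL if e["role"] not in POLICY.get(e["dataset"], set())]


try:
    read_preventive("analyst", "salaries")
    blocked = False
except PermissionError:
    blocked = True

read_audited("analyst", "weblogs")    # allowed and logged
read_audited("analyst", "salaries")   # allowed now, flagged in the review
```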

Hellerstein demands open metadata standards across software components. Open metadata repository services must be available to access a data catalog. He sees phenomenal potential in this for enabling collective intelligence: collaboration across departments instead of working in silos, and sharing knowledge instead of hoarding it. Hellerstein and his team at UC Berkeley created Ground, an open-source, vendor-neutral data context service. Data context is all of the information surrounding the use of data in an organization:

- what data an organization has

- who in the organization is using which data

- when and how data is changing

- to and from where the data is moving.
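
To make the four questions of data context concrete, here is a minimal in-memory sketch. It is not Ground's data model or API; the `DataContext` class and its methods are hypothetical and only show how a catalog could record existence, usage, changes, and lineage, and answer questions such as which datasets are downstream of a source.

```python
from collections import defaultdict
from datetime import datetime


class DataContext:
    """Hypothetical catalog: what exists, who uses it, how it changes, where it moves."""

    def __init__(self):
        self.datasets = set()              # what data the organization has
        self.usage = defaultdict(set)      # dataset -> users of that dataset
        self.changes = defaultdict(list)   # dataset -> [(when, description)]
        self.lineage = []                  # (source, target) data movements

    def register(self, dataset):
        self.datasets.add(dataset)

    def record_use(self, user, dataset):
        self.usage[dataset].add(user)

    def record_change(self, dataset, when, description):
        self.changes[dataset].append((when, description))

    def record_move(self, source, target):
        self.lineage.append((source, target))

    def users_of(self, dataset):
        return sorted(self.usage[dataset])

    def changes_of(self, dataset):
        """Changes to a dataset in chronological order."""
        return sorted(self.changes[dataset])

    def downstream_of(self, dataset):
        """All datasets that directly or transitively receive data from `dataset`."""
        seen, frontier = set(), [dataset]
        while frontier:
            current = frontier.pop()
            for source, target in self.lineage:
                if source == current and target not in seen:
                    seen.add(target)
                    frontier.append(target)
        return seen


ctx = DataContext()
for name in ("raw_clicks", "sessions", "kpi_report"):
    ctx.register(name)
ctx.record_use("alice", "sessions")
ctx.record_use("bob", "sessions")
ctx.record_change("sessions", datetime(2017, 3, 1), "added column device_type")
ctx.record_move("raw_clicks", "sessions")
ctx.record_move("sessions", "kpi_report")
```

A question like "who is affected if `raw_clicks` changes?" then becomes a lineage traversal followed by a usage lookup.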

Open metadata is still an illusion in the industry today. Tool vendors such as Alation, Collibra, Oracle, Datum, and Informatica have their own proprietary formats. Some vendors offer rich APIs to read and write data in the catalog. Gartner calls the data catalog the new black in data management. The Gartner Magic Quadrant for Metadata Management Solutions 2017 can be found here.

Google uses a crawler to collect metadata about datasets from its internal systems. Google described its data catalog GOODS (Google Dataset Search) in a paper. The blog post “GOODS – How to post-hoc organize the Data Lake” contains a summary.
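
A post-hoc crawler derives catalog entries from data that already exists, instead of requiring metadata up front. The Python sketch below is a toy under stated assumptions: it only looks at CSV files in a local directory and records path, header columns, size, and modification time. It does not reflect how GOODS is actually implemented.

```python
import csv
import tempfile
from pathlib import Path


def crawl(root):
    """Post-hoc cataloging: walk existing files and derive metadata after the fact."""
    catalog = []
    for path in sorted(Path(root).rglob("*.csv")):
        with path.open(newline="", encoding="utf-8") as handle:
            header = next(csv.reader(handle), [])  # column names, if any
        stat = path.stat()
        catalog.append({
            "path": str(path.relative_to(root)),
            "columns": header,
            "size_bytes": stat.st_size,
            "modified": stat.st_mtime,
        })
    return catalog


# Build a tiny "data lake" in a temporary directory and crawl it.
with tempfile.TemporaryDirectory() as lake:
    Path(lake, "sales").mkdir()
    Path(lake, "sales", "2017.csv").write_text("region,revenue\nEU,100\n", encoding="utf-8")
    Path(lake, "notes.txt").write_text("not a dataset", encoding="utf-8")
    entries = crawl(lake)
```

Nothing had to be declared when the files were written; all catalog entries are inferred afterwards, which is also why such crawling becomes expensive as the number of files grows.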

Metadata is created through agile processes and needs to be managed in new ways. Collaboration and automation will be essential. Google GOODS shows that the amount of metadata can be huge and that processing it can be very time-consuming.
